Tag: Techno utopians

-

A brief keynote to the Westminster Digital Forum


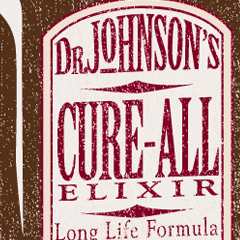

My name is Andrew Orlowski, from The Register. I was looking for an illustration that might bring a fresh perspective to a very old debate, and I came across this in my library: an extraordinary book written by a gentleman called Yoneji Masuda. The book was written in 1980 and it…

-

Tim Kring


The audience are the actors in writer Tim Kring’s latest adventure. In his famous creation, the TV show Heroes, people discover they have superhero powers, and go off and battle Evil. In his latest, people go and battle Evil, and discover they have been given Nokia smartphones. The ambitious, Nokia-sponsored interactive extravaganza began this weekend,…